Augmenting the dataset

Esther had an excellent model already, but she had the budget to experiment a bit more and improve its results.

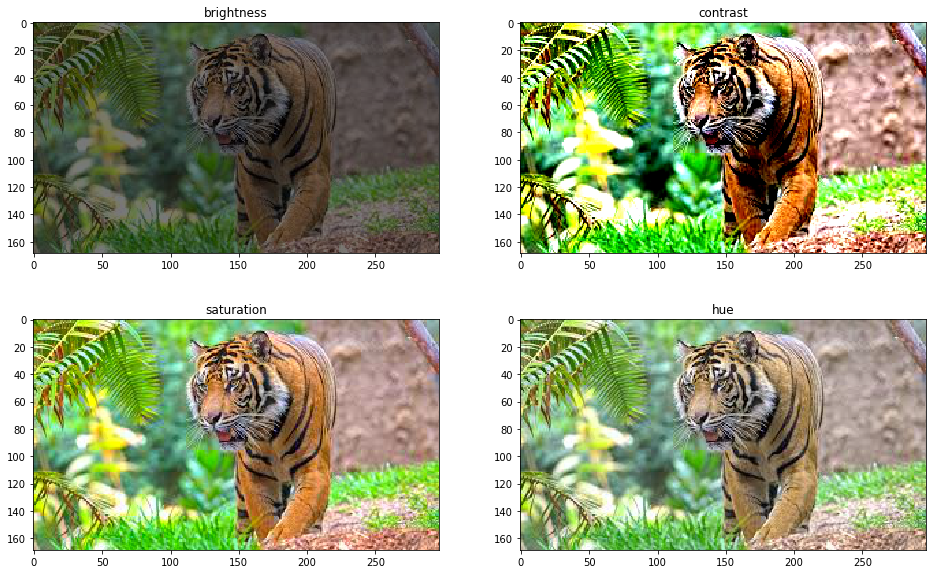

She was building a deep network to classify pictures. From the beginning, her Achilles’ heel had been the size of her dataset. One of her teammates recommended that she use a few data augmentation techniques.

Esther was all-in. Although she wasn’t sure about the advantages of data augmentation, she was willing to do some research and start using it.

Which of the following statements about data augmentation are true?

Esther can use data augmentation to expand her training dataset and assist her model in extracting and learning features regardless of their position, size, rotation, etc.

Esther can use data augmentation to expand the test dataset, have the model predict the original image plus each copy, and return an ensemble of those predictions.

Esther will benefit from the ability of data augmentation to act as a regularizer and help reduce overfitting.

Esther has to be careful because data augmentation will reduce the ability of her model to generalize to unseen images.
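
For readers who want to see the mechanics that the first two statements describe, here is a minimal NumPy sketch. It is not Esther's actual pipeline: the function names `augment`, `predict_with_tta`, and `toy_predict` are hypothetical, the transformations (a random horizontal flip and a small wrap-around shift) are just two simple examples, and `toy_predict` is a stand-in for a trained classifier.

```python
import numpy as np

def augment(image, rng):
    """Return a randomly flipped and shifted copy of an (H, W, C) image."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip
    # Shift by up to 2 pixels along the width; np.roll wraps pixels around the edge.
    shift = int(rng.integers(-2, 3))
    return np.roll(out, shift, axis=1)

def predict_with_tta(predict_fn, image, n_copies, rng):
    """Average class probabilities over the original image and n_copies augmented copies."""
    views = [image] + [augment(image, rng) for _ in range(n_copies)]
    probs = np.stack([predict_fn(view) for view in views])
    return probs.mean(axis=0)

def toy_predict(image):
    """Hypothetical classifier: softmax over mean brightness of the left and right halves."""
    half = image.shape[1] // 2
    logits = np.array([image[:, :half].mean(), image[:, half:].mean()])
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))

# Training-time use: each call produces another variant of the same labeled image.
extra_training_samples = [augment(image, rng) for _ in range(4)]

# Prediction-time use: ensemble the predictions for the original and its copies.
ensembled = predict_with_tta(toy_predict, image, n_copies=4, rng=rng)
```

In a real project the same idea would usually be expressed with the augmentation utilities of whichever deep learning framework is in use, rather than hand-written array operations.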